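Naive Bayes is a probabilistic classification method that assumes the features are conditionally independent given the class. In practice it is commonly used for tasks such as text classification and spam filtering.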

I. How the Naive Bayes Algorithm Works

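The core idea of Naive Bayes is to apply Bayes' theorem: compute the probability of each class given the observed features, and assign the sample to the most probable class. The algorithm proceeds in the following steps:

1. Compute the prior probabilities: from the training set, compute the fraction of samples that belong to each class. This fraction is the prior probability of the class.

2. Compute the conditional probabilities: for each feature, compute its conditional probability under each class, that is, the probability that the feature takes a given value within that class.

3. Predict: for a new sample, use Bayes' theorem to compute the probability of each class, then choose the class with the highest probability as the prediction.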
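The three steps above can be summarized in one formula. The notation is an assumption introduced here for illustration: $c$ denotes a class and $x_1, \dots, x_n$ the feature values of a sample.

```latex
P(c \mid x_1, \dots, x_n)
  = \frac{P(c)\, P(x_1, \dots, x_n \mid c)}{P(x_1, \dots, x_n)}
  \propto P(c) \prod_{i=1}^{n} P(x_i \mid c),
\qquad
\hat{c} = \arg\max_{c} \; P(c) \prod_{i=1}^{n} P(x_i \mid c)
```

The denominator is the same for every class, so it can be dropped when comparing classes. The product form follows from the conditional independence assumption.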

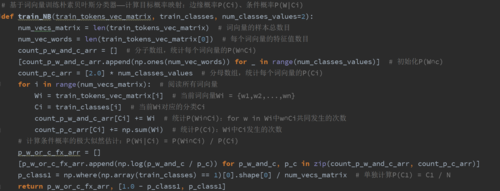

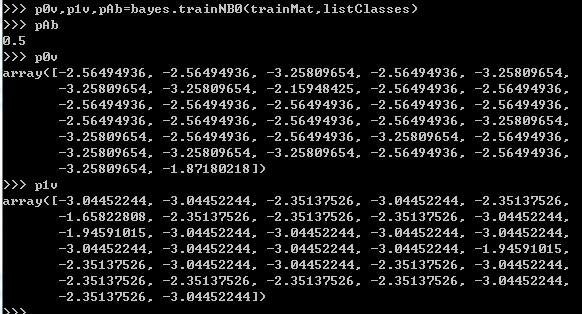

II. Implementing the Naive Bayes Algorithm

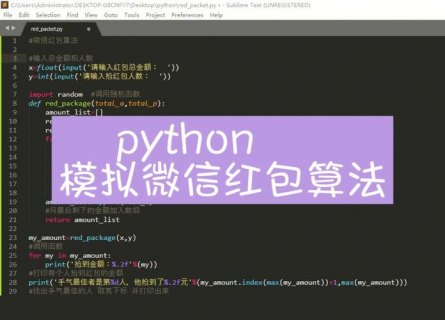

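The following uses text classification as an example to walk through a Python implementation of Naive Bayes. Assume we have a training set of texts with their class labels, plus a new text to classify.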

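1. Data preprocessing: first preprocess the training set (word segmentation, stop-word removal, and so on), then extract features from each text. Here we represent text with the bag-of-words model.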

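    import jieba
    from collections import Counter

    def preprocess(text):
        # Segment the Chinese text with jieba and join the tokens with spaces.
        words = jieba.cut(text)
        return ' '.join(words)

    def extract_features(text, vocabulary):
        # text is a space-separated string as returned by preprocess().
        # vocabulary is assumed to map each known word to its index.
        words = text.split()
        return [vocabulary[word] for word in words if word in vocabulary]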
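The functions in this walkthrough take a `vocabulary` argument that the text does not construct. One possible way to build it is sketched below. The helper name `build_vocabulary` and the sample texts are hypothetical, and the texts are assumed to be already segmented into space-separated tokens, so the sketch runs without jieba.

```python
def build_vocabulary(segmented_texts):
    """Map each distinct token to an integer index, in order of first appearance."""
    vocabulary = {}
    for text in segmented_texts:
        for word in text.split():
            if word not in vocabulary:
                vocabulary[word] = len(vocabulary)
    return vocabulary


# Hypothetical, already-segmented sample texts.
texts = ["free prize now", "meeting at noon", "free meeting"]
vocabulary = build_vocabulary(texts)
print(vocabulary)
```

In a real pipeline the vocabulary would normally be built from the training texts only, so that test data does not leak into the model.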

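2. Compute the prior probabilities: count the training samples in each class, then divide by the total number of samples to get each class's prior.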

    def calculate_prior_probabilities(data):
        # Each item in data is a sequence whose last element is the class label.
        classes = set(item[-1] for item in data)
        prior_probabilities = {cls: sum(1 for item in data if item[-1] == cls) / len(data) for cls in classes}
        return prior_probabilities
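As a quick check of the prior computation, here is a small self-contained sketch. It uses `collections.Counter` to count the labels in one pass instead of rescanning the data for every class. The function name `priors_from_labels` and the toy `(text, label)` pairs are hypothetical.

```python
from collections import Counter


def priors_from_labels(data):
    """Return {label: fraction of samples with that label}; the label is item[-1]."""
    label_counts = Counter(item[-1] for item in data)
    total = len(data)
    return {label: count / total for label, count in label_counts.items()}


# Toy training set of (segmented text, label) pairs, made up for illustration.
data = [
    ("free prize now", "spam"),
    ("free money", "spam"),
    ("meeting at noon", "ham"),
    ("lunch at noon", "ham"),
    ("project meeting", "ham"),
]
priors = priors_from_labels(data)
print(priors)  # spam has prior 2/5, ham has prior 3/5
```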

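3. Compute the conditional probabilities: for each feature, compute its conditional probability under each class. Here we use Laplace smoothing to handle data sparsity, so that a word never seen in a class does not get zero probability.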

    def calculate_conditional_probabilities(data, vocabulary):
        # Multinomial model with Laplace (add-one) smoothing:
        # P(word | cls) = (count(word, cls) + 1) / (total_words_in_cls + |V|)
        # item[0] is a space-separated text, item[-1] its class label.
        conditional_probabilities = {}
        for cls in set(item[-1] for item in data):
            # Count occurrences (not documents) so numerator and denominator match.
            word_counts = Counter(
                word
                for item in data if item[-1] == cls
                for word in item[0].split() if word in vocabulary
            )
            total_words = sum(word_counts.values())
            conditional_probabilities[cls] = {
                word: (word_counts[word] + 1) / (total_words + len(vocabulary))
                for word in vocabulary
            }
        return conditional_probabilities
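To make the effect of Laplace smoothing concrete, the sketch below applies add-one smoothing to made-up word counts for a single class. The function name `smoothed_probabilities`, the `alpha` parameter, and the counts are hypothetical.

```python
from collections import Counter


def smoothed_probabilities(word_counts, vocabulary, alpha=1):
    """P(word | class) = (count + alpha) / (total + alpha * |V|) for every vocabulary word."""
    total = sum(word_counts[word] for word in vocabulary)
    denominator = total + alpha * len(vocabulary)
    return {word: (word_counts[word] + alpha) / denominator for word in vocabulary}


# Made-up counts for one class; "noon" never occurs in this class.
vocabulary = ["free", "prize", "noon"]
spam_counts = Counter({"free": 3, "prize": 1})

probs = smoothed_probabilities(spam_counts, vocabulary)
print(probs)
```

Without smoothing, `noon` would get probability 0 and any text containing it would be ruled out for this class. With add-one smoothing it gets 1/7, and the probabilities still sum to 1.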

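4. Predict: for a new text, first extract its features, then use Bayes' theorem to score each class by its (unnormalized) posterior probability, and finally choose the class with the highest score as the prediction.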

    import math

    def predict(test_text, data, vocabulary, conditional_probabilities):
        # test_text is a space-separated string; words outside the vocabulary are skipped.
        words = [word for word in test_text.split() if word in vocabulary]
        prior_probabilities = calculate_prior_probabilities(data)
        posterior_probabilities = {}
        for cls in prior_probabilities:
            # Work in log space: log P(cls) + sum of log P(word | cls).
            # This avoids floating-point underflow from multiplying many small numbers.
            score = math.log(prior_probabilities[cls])
            for word in words:
                score += math.log(conditional_probabilities[cls][word])
            posterior_probabilities[cls] = score
        return max(posterior_probabilities, key=posterior_probabilities.get)
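One practical caveat when multiplying many per-word probabilities: the product can underflow to zero in floating point. A common remedy is to add logarithms instead, which preserves the ranking of the classes. The sketch below uses made-up probabilities purely for illustration.

```python
import math

# 400 made-up word probabilities of 0.01 each, standing in for a long document.
word_probabilities = [0.01] * 400
prior = 0.5

# Direct product: 0.5 * 0.01**400 is far below the smallest positive float.
product = prior
for p in word_probabilities:
    product *= p

# Log-space score: log(prior) + sum of log(p).
log_score = math.log(prior) + sum(math.log(p) for p in word_probabilities)

print(product)    # underflows to 0.0
print(log_score)  # a finite negative number
```

Once every class scores 0.0, the comparison between classes is meaningless, whereas the log scores remain comparable.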

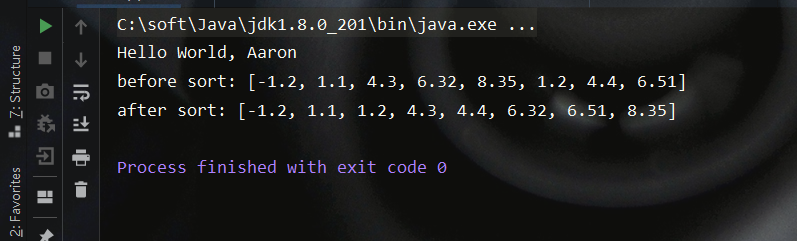

5. Test: evaluate the model's performance on a held-out test set.

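    def evaluate(test_data, test_labels, data, vocabulary, conditional_probabilities):
        # test_data is a list of space-separated texts; test_labels holds the true classes.
        # The unused test_text parameter of the original signature has been dropped.
        predictions = [predict(text, data, vocabulary, conditional_probabilities) for text in test_data]
        # Compare element by element; `predictions == test_labels` would compare whole lists.
        correct = sum(pred == label for pred, label in zip(predictions, test_labels))
        accuracy = correct / len(test_labels)
        return accuracy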
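Putting the five steps together, the following is a compact, self-contained sketch of a multinomial Naive Bayes text classifier on a toy English dataset. It is not the code above verbatim: the names `train` and `classify`, the data, and the use of log-probabilities are assumptions made for illustration, and the texts are assumed to be already tokenized with spaces.

```python
import math
from collections import Counter, defaultdict


def train(data):
    """Estimate priors and Laplace-smoothed word probabilities from (text, label) pairs."""
    label_counts = Counter(label for _, label in data)
    word_counts = defaultdict(Counter)
    for text, label in data:
        word_counts[label].update(text.split())
    vocabulary = {word for counts in word_counts.values() for word in counts}

    priors = {label: n / len(data) for label, n in label_counts.items()}
    likelihoods = {}
    for label in label_counts:
        total = sum(word_counts[label].values())
        likelihoods[label] = {
            word: (word_counts[label][word] + 1) / (total + len(vocabulary))
            for word in vocabulary
        }
    return priors, likelihoods, vocabulary


def classify(text, priors, likelihoods, vocabulary):
    """Return the label with the highest log-posterior; unknown words are ignored."""
    words = [word for word in text.split() if word in vocabulary]
    scores = {
        label: math.log(priors[label])
        + sum(math.log(likelihoods[label][word]) for word in words)
        for label in priors
    }
    return max(scores, key=scores.get)


# Toy data, made up for illustration.
train_data = [
    ("free prize now", "spam"),
    ("win free money", "spam"),
    ("meeting at noon", "ham"),
    ("project meeting notes", "ham"),
]
test_data = [("free money now", "spam"), ("meeting notes", "ham")]

priors, likelihoods, vocabulary = train(train_data)
predictions = [classify(text, priors, likelihoods, vocabulary) for text, _ in test_data]
accuracy = sum(p == label for p, (_, label) in zip(predictions, test_data)) / len(test_data)
print(predictions, accuracy)
```

On a dataset this small the accuracy figure says nothing about real-world performance; the point is only to show how the pieces connect.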

III. Related Questions and Answers

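Question 1: Why does Naive Bayes assume that the features are independent? What are the advantages and disadvantages of this assumption?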

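Answer: Naive Bayes assumes the features are independent (more precisely, conditionally independent given the class) because the dependencies among features are hard to estimate accurately in practice. This simplification greatly reduces computational cost and makes both training and classification fast. The drawback is that the assumption often does not hold, which can degrade classification performance. Note that Gaussian Naive Bayes does not address this problem: it keeps the same independence assumption and only models continuous features with a normal distribution. To model dependencies between features, one can use extensions such as Tree-Augmented Naive Bayes (TAN) or a general Bayesian network.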
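A small numeric sketch of the drawback: if a feature is duplicated, so that the two copies are perfectly correlated, Naive Bayes counts the same evidence twice and becomes overconfident. The probabilities below are made up for illustration, and `posterior` is a hypothetical helper.

```python
def posterior(prior_a, likelihoods_a, likelihoods_b):
    """Posterior of class A in a two-class problem under the naive independence assumption."""
    score_a = prior_a
    for p in likelihoods_a:
        score_a *= p
    score_b = 1 - prior_a
    for p in likelihoods_b:
        score_b *= p
    return score_a / (score_a + score_b)


# One feature with P(x | A) = 0.8 and P(x | B) = 0.4, equal priors.
once = posterior(0.5, [0.8], [0.4])

# The same feature included twice: no new information, but the evidence is squared.
twice = posterior(0.5, [0.8, 0.8], [0.4, 0.4])

print(once, twice)
```

The correct posterior is still 2/3, because the second copy carries no new information, yet the naive estimate rises to 0.8.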
